TensorFlow Resources

Course on TensorFlow

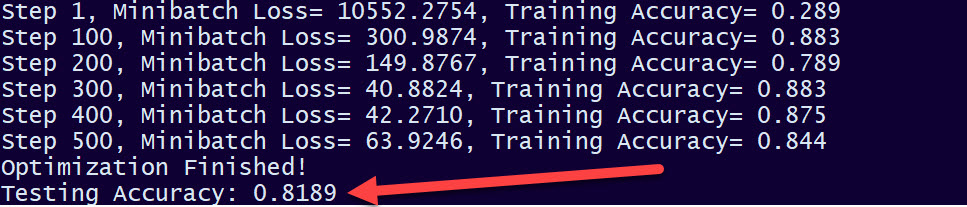

Run TensorFlow in 10 Minutes with TFLearn – TFLearn lets machine learning engineers build TensorFlow neural networks with minimal code. In this course, Implementing Multi-layer Neural Networks with TFLearn, you’ll learn the foundations needed to build TensorFlow neural networks. First, you’ll explore how deep learning is used to accelerate artificial intelligence. Next, you’ll discover how to build convolutional neural networks. Finally, you’ll learn how to deploy both deep and generative neural networks. When you’re finished with this course, you’ll have the deep learning skills and knowledge needed to build the next generation of artificial intelligence.