Splunk is a popular application for analyzing machine data in the data center. What happens when Splunk Administrators want to add data sources that aren’t on the default list to their Splunk environment?

The Administrators have two options:

- First, they can import the data source using the regular expression option, which is only fun if you like regular expressions.

- Second, they can use a Splunk Add-On or Application.
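To give a feel for the first option, here is a minimal sketch of regular-expression field extraction in plain Python. It is not Splunk's own extraction tooling; the log line, the field names, and the `extract_fields` helper are all made up for illustration. The idea is the same, though: named capture groups turn raw text into fields.

```python
import re

# Hypothetical log line; the format and field names are assumptions for illustration.
LOG_LINE = "2024-01-15 10:32:07 host=web01 status=503 response_ms=1840"

# Each named group plays the role of one extracted field.
FIELD_PATTERN = re.compile(
    r"host=(?P<host>\S+)\s+status=(?P<status>\d{3})\s+response_ms=(?P<response_ms>\d+)"
)


def extract_fields(line):
    """Return a dict of extracted fields, or an empty dict if the line does not match."""
    match = FIELD_PATTERN.search(line)
    return match.groupdict() if match else {}


fields = extract_fields(LOG_LINE)
print(fields)
```

Every new log format needs its own pattern like this one, which is why the Add-On route is often the more pleasant choice.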

Let’s learn how Splunk Add-Ons are developed and how to install them.

How to Create Splunk Plugins

Developers have several options for creating Splunk Applications or Add-Ons. Let’s step through them, going from the easiest to the hardest.

The first option for creating a Splunk Add-On is the dashboard editor inside the Splunk app. Using the dashboard editor, you can create custom visualizations of your Splunk data. Simply click to add custom searches, tables, and fields. Next, save the dashboard and test out the Splunk Application.

The second option is to use XML or HTML markup inside the Splunk dashboard. Either markup language gives developers more control over the look and feel of their dashboards. Most developers with basic HTML, CSS, and XML skills will choose this option over the standard dashboard editor.

The last option inside the local Splunk environment is SplunkJS. Of all the options for creating applications in the local Splunk environment, SplunkJS allows the greatest control. Developers with intermediate JavaScript skills will find SplunkJS fairly easy to use, while those without JavaScript skills will have a more difficult time.

Finally, for developers who want the most control and flexibility for their Splunk Add-Ons, Splunk offers Application SDK options. These applications leverage the Splunk API and allow developers to write the application in their favorite language. Using the SDK is by far the most difficult route, but it also produces the most capable Splunk Applications.
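To show what leveraging the Splunk API can look like, here is a rough sketch that builds a one-shot search request against Splunk's REST API using only the Python standard library. It assumes the management port is the default 8089 and that basic authentication is enabled; the host, credentials, query, and the `build_oneshot_search_request` helper are placeholders invented for this example. The request is only built, not sent.

```python
import base64
import urllib.parse
import urllib.request


def build_oneshot_search_request(host, port, username, password, query):
    """Build (but do not send) a POST request that runs a one-shot search."""
    url = f"https://{host}:{port}/services/search/jobs"
    # exec_mode=oneshot asks Splunk to return results in the response itself.
    body = urllib.parse.urlencode({
        "search": query,
        "exec_mode": "oneshot",
        "output_mode": "json",
    }).encode("utf-8")
    # Basic authentication header; the credentials here are placeholders.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, data=body, method="POST")
    request.add_header("Authorization", f"Basic {token}")
    return request


request = build_oneshot_search_request(
    "localhost", 8089, "admin", "changeme", "search index=_internal | head 5"
)
print(request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` requires a running Splunk instance and, for a self-signed certificate, a suitable TLS context. An SDK handles details like authentication and result parsing for you, which is a large part of its appeal.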

Splunk Application SDK options:

What is Splunkbase

After developers create their applications, they can upload them to Splunkbase. Splunkbase is the de facto marketplace for Splunk Add-Ons and Applications. It’s a community-driven marketplace for both licensed (paid) and non-licensed (free) Splunk Add-Ons and Applications. Splunk certification signals that an application is secure and mature.

Think of Splunkbase as Apple’s App Store. Users download applications that run on top of iOS to extend the functionality of the iPhone. Both community and corporate developers build Apple’s iOS apps. Just like the App Store, Splunkbase offers both paid and free Applications and Add-Ons.

How to install from Splunkbase

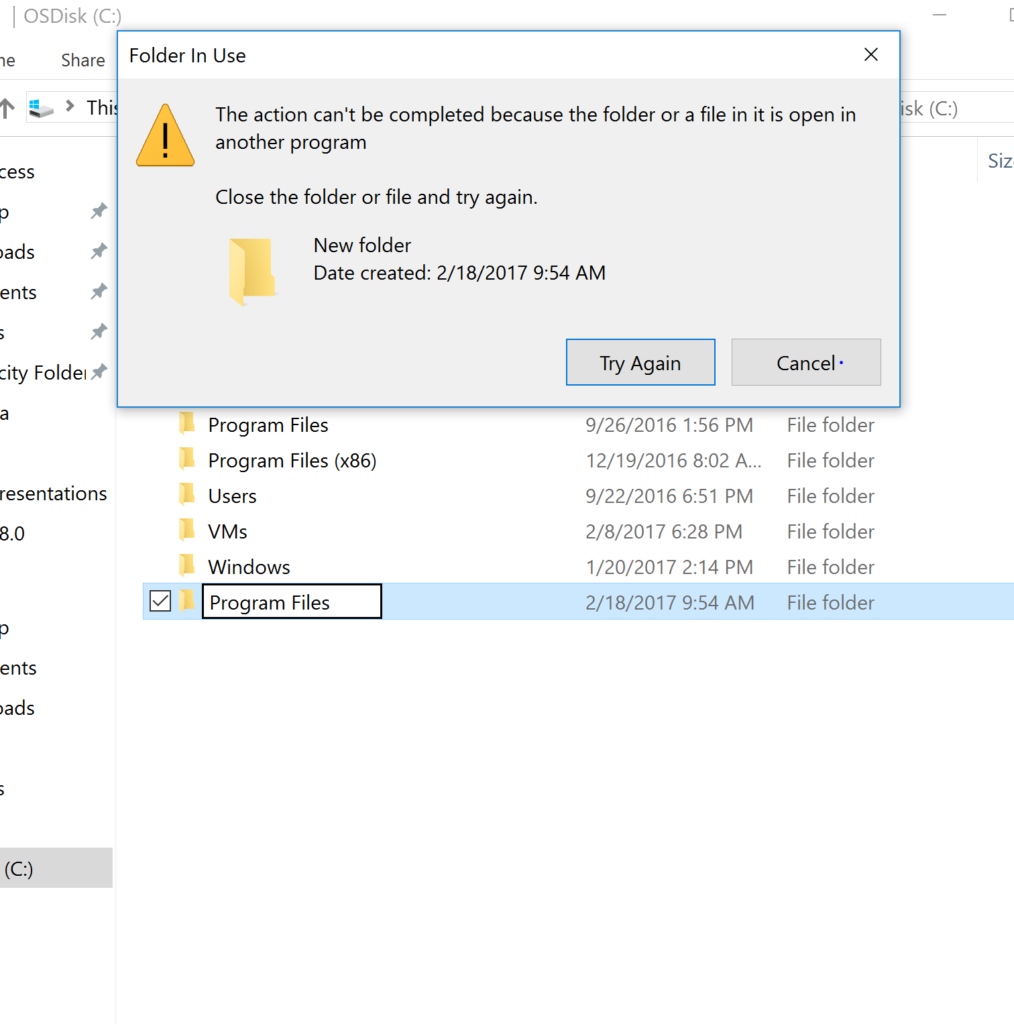

The local Splunk environment integrates with Splunkbase, which makes Splunkbase installs seamless. Let’s walk through a scenario: installing Splunk Analytics for Hadoop in my local Splunk environment.

Steps for installing an App from Splunkbase:

- First, log into the local Splunk environment

- Second, click Splunk Apps

- Next, browse for “Splunk Analytics for Hadoop”

- Click Install and enter your login information

- Finally, view the App to begin using it

Another option is to install directly into the local Splunk environment. Simply download the application package and upload it to the local Splunk environment. Make sure to practice good Splunk hygiene by only downloading trusted Splunk Apps.
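One way to practice that hygiene is to compare the downloaded package against a checksum before uploading it. The sketch below assumes the publisher provides a SHA-256 checksum for the package; the function names are made up for this example and are not part of any Splunk tooling.

```python
import hashlib


def sha256_of_file(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path, published_hex):
    """Compare a file's digest with a published checksum, ignoring case and whitespace."""
    return sha256_of_file(path) == published_hex.strip().lower()
```

Call `matches_published_checksum` with the path to the downloaded package and the published checksum string, and only upload the package when it returns `True`.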

Closing thoughts on Splunk Apps & Add-Ons

In addition to extending Splunk, Add-Ons broaden the use cases a Splunk environment can serve. As users begin working with Splunk, they want to add new data sources. Often the new data sources are supported out of the box; when they aren’t, Splunk’s community of App developers fills the gap. Splunk’s hockey-stick adoption comes from this ability to add new data sources, and the search for new insights constantly pushes new data sources into Splunk.

Looking to learn more about Splunk? Check out my Pluralsight course, Analyzing Machine Data with Splunk.