To say Streaming Analytics is popular is an understatement. Right now, Streaming Engineering is a top skill that Data Engineers must understand, and there are many options and development stacks for analyzing data in a streaming architecture. Today I sat down with Lewis Kaneshiro (CEO & Co-founder) and Karthik Ramasamy (Co-founder) of Streamlio to get their thoughts on Streaming Analytics and Data Engineering careers.

Streamlio Open Source Stack

Streamlio is a full-stack streaming solution that handles messaging, processing, and stream storage for real-time applications. The Streamlio development stack is built primarily from Heron, Pulsar, and BookKeeper. Let’s discuss each of these open source projects.

Heron

Heron is a real-time processing engine incubated and used at Twitter. Heron is currently in the process of moving to the Apache Software Foundation (learn more about this in the interview). Heron sits at the heart of real-time analytics, processing data before its time value expires.

Pulsar

Pulsar is an Apache incubator project for distributed publish-subscribe messaging in real-time architectures. Like many open source big data projects, Pulsar was first used at Yahoo.

BookKeeper

BookKeeper is a scalable, fault-tolerant, low-latency storage service used in many development stacks. BookKeeper is an Apache Software Foundation project and is popular in many open source streaming architectures.

Interview Questions

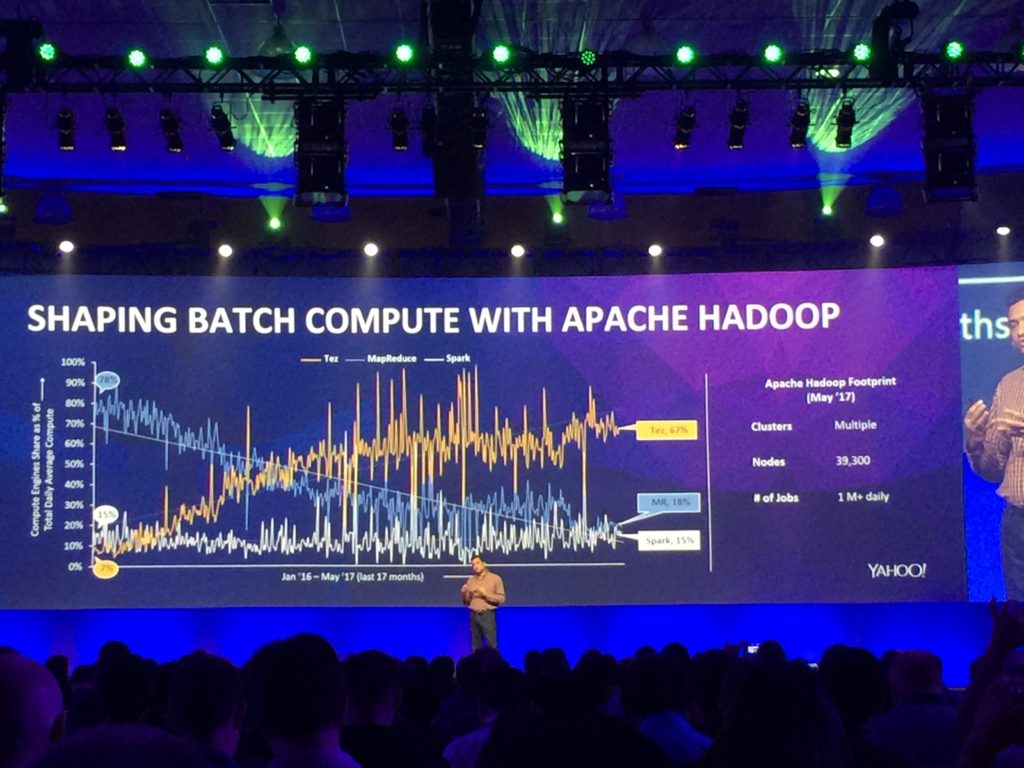

- Have we as a community accepted Hadoop related tools to be virtualized or containerized?

- How do Data Engineers get started with Streamlio?

- What are the biggest real-time Analytics use cases?

- Is the Internet of Things (IoT) the primary driver behind the explosion in Streaming Analytics?

- What skills should new Data Engineers focus on to become amazing Data Engineers?

Check out the interview to learn the answers to these questions and more.

Video Streamlio Interview

Links from Streamlio Interview