My Journey from Web Developer to Machine Learning Engineer

How can a Web Developer become a Machine Learning Engineer? It’s a journey I made quite a few years back, when Hadoop was becoming a popular platform for analyzing large data sets in a distributed environment. A lot has changed in Machine Learning since those days, but many of the concepts are still the same. TensorFlow, and TensorFlow.js in particular, has opened the door for machine learning to run on many more kinds of machines. In fact, with TensorFlow.js, web developers can train machine learning or deep learning models in the browser using JavaScript.

In this video, I give you three tips for becoming a Machine Learning Engineer using your web development skills. Watch the video to learn more.

Transcript – Web Developer to Machine Learning Engineer

Hi folks, Thomas Henson here with thomashenson.com, and today is another episode of Big Data Big Questions. Today’s question is about moving from Web Development to Machine Learning Engineer. What I wanna talk about is how you become a machine learning engineer if you’ve got a background in web development. Not only are we gonna talk about my journey, but I’m also gonna give you three tips for making that journey if you’re a web developer thinking about it. So, find out more right after this.

And, we’re back. Today’s question is about moving from Web Development into Machine Learning Engineering, but before we tackle that, I wanna encourage everybody out there: if you have any Big Data-related questions, or any questions in general, go ahead and throw them in the comment section below or go to my website, thomashenson.com/big-questions, and submit your questions there. I will answer them here on YouTube. I really love the engagement and being able to get out in front of the community, so just keep bringing those in. And if you haven’t already, go ahead and subscribe to my YouTube channel; that way, you never miss an episode of Big Data Big Questions or some of the other cool things I’m doing with interviews, book reviews, and just general technology and awesomeness, right?

So, let’s jump into the question for today: how do you transition from a web developer to a machine learning engineer? One of the reasons behind this question is a video I did where we talked about what a machine learning engineer is. It’s a huge, hot topic area, and a lot of people are looking into how they become a data engineer or a machine learning engineer. There’s a lot of interest in the web development community, too. Maybe there are a lot of people out there saying, “Hey, I kinda wanna be on that bleeding edge. I wanna see what’s going on with this machine learning engineer role. How do I get into that? Is that even possible?” Maybe you’re building C# applications, or you’re a PHP or ASP.NET developer, any genre there; maybe you do general JavaScript, or you’re a Node engineer, and you really want to branch out and see what’s out there.

So, before I give you the three tips for how you can do that, I wanna tell you that it is possible. When I started my journey into Big Data, I was an ASP.NET developer. This was quite a few years back, in the early days of the Hadoop community; I got started with Hadoop 1.0. I was a contractor, and we were coming to the end of a project I’d been working on for many years. I was looking around to see what else was out there, and one of the projects was a data analytics project. I knew it was gonna be really heavy on research and kind of on the cutting edge, so I really started looking into it. As I got more involved, I started learning about Hadoop and some of the things we were doing as a community.

That really sparked it for me, and it is possible; it’s something I was able to do. There were a lot of things I had to learn, and a lot of things that, if I could go back, I would have learned first or done a little bit differently. Where I am now is because of all those trials and tribulations I went through, but the cool thing is I get to share some of them with anybody out there who’s a web developer looking into this, or anybody who’s just getting started. Today, though, I wanna focus on the web developer. Your skills are gonna translate, right? But there are gonna be some gaps and some things you need to do. So, say you’re a web developer asking, “Hey, how do I jump in and transition into a machine learning engineer?” You want to be involved with the algorithms and the day-to-day development activities for these massive machine learning, deep learning, or AI projects. How can you get involved?

So, my first tip, and you might not like this one: don’t cheat and don’t do the other tips first. Take two weeks, 30 minutes a day, and start learning about linear regression and linear algebra. There are a ton of free resources out there. You can go to Coursera; they have some free courses on it, and you can opt in for the certificate to get certified. There’s a lot on YouTube, so you can go through some daily training there. There are a lot of different blog posts out there, too. Just take 30 minutes a day to go through the math portion.

Math and statistics are gonna be one of the gaps you have. I didn’t take any of the formal courses; I just went back and started looking at some of my old college notes, trying to figure it out. But looking back, I would go through one. So, if you have the time, go through the two weeks: watch some YouTube videos, read some blog posts, go to Coursera and sign up for a course. If you’re on Pluralsight or any of the other online training sites, you’ll find plenty of resources there; take one and do it for two weeks. Also, these are pretty big careers, so maybe sign up for a full course. If you’re in college right now, take a linear regression course. If you’re not in college and you have the opportunity, outseam [Phonetic] why you would wanna do something like that, if this is a path you’re going down and you’re really serious about it.

I know it’s not the most fun part, but I promise you it’s gonna help you down the road with understanding the terminology and the math behind what we’re doing. Even if you’re not looking to be a data scientist (we’ve talked about data scientist versus data engineer here before, and this path is more toward data engineer), you’re still gonna wanna have that math background.
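As a small taste of how linear regression and linear algebra connect, here is a minimal sketch (plain Python, no libraries; the function names are illustrative, not from any course or library) that fits a straight line two ways: with the familiar slope/intercept formula from statistics, and by solving the linear-algebra normal equation (X^T X) b = X^T y for a two-parameter model. Both give the same least-squares answer.

```python
# Simple linear regression two ways: the statistics formula and the
# linear-algebra "normal equation" (X^T X) b = X^T y.

def fit_line(xs, ys):
    """Least-squares slope and intercept via means and deviations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def fit_line_normal_equation(xs, ys):
    """Same fit, solving the 2x2 system (X^T X) b = X^T y with Cramer's rule.

    X has a column of ones (for the intercept) and a column of x values.
    """
    n = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    # X^T X = [[n, sx], [sx, sxx]] and X^T y = [sy, sxy]
    det = n * sxx - sx * sx
    intercept = (sy * sxx - sx * sxy) / det
    slope = (n * sxy - sx * sy) / det
    return slope, intercept


if __name__ == "__main__":
    xs = [1, 2, 3, 4]
    ys = [3, 5, 7, 9]  # exactly y = 2x + 1
    print(fit_line(xs, ys))                  # (2.0, 1.0)
    print(fit_line_normal_equation(xs, ys))  # (2.0, 1.0)
```

Working a two-parameter case by hand like this makes the terminology (slope, intercept, least squares, solving a linear system) much easier to recognize later, when a library does the same thing for many features at once.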

Tip number two: take two weeks, maybe three, but two weeks for sure, 30 minutes a day (you’re starting to see a trend here), and walk through the Hadoop or Spark tutorials. You want to learn and understand how to do those. Do the basic tutorials. I’m not talking about setting up your own full Hadoop cluster in your own data center; I’m saying go through the tutorials with the Sandbox. There’s a ton of resources out there. I have a lot of Pluralsight courses that assume just a little bit of SQL knowledge and a little bit of Linux knowledge, and that will help you go through it, but there are other resources out there, too. Obviously, I’d love for you to join in and watch some of my Pluralsight courses, but there’s a ton of resources out there. This is a huge community.

Coursera has some baseline courses. You can find things on YouTube. I’ve got a ton of free resources on my blog that you can just walk through, so if you like walking through tutorials, grab some of the code. I’ve got a ton of tutorials and a ton of basic command [INAUDIBLE 00:06:40] stuff that you can do to start learning Hadoop and Spark. So, like I said, just take 30 minutes a day, not too much [INAUDIBLE 00:06:48]. You can download one of the sandboxes, whether it comes from Cloudera or from Hortonworks; they come with Hadoop installed and also have Spark. You can run through some of the basic tutorials. There’s a ton of resources out there; like I said, I’ve got a Pluralsight course, actually quite a few Pluralsight courses around that. But take the time and go through that.
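Many Hadoop and Spark tutorials start with a word count, the classic first MapReduce job. As a rough, single-process sketch of what that job does (plain Python standing in for Hadoop; the function names here are hypothetical, not Hadoop APIs), the map, shuffle, and reduce phases look like this:

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle/sort: group all values emitted for the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: sum the counts for one word."""
    return key, sum(values)


def word_count(lines):
    """Run map -> shuffle -> reduce over all lines, keys in sorted order."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    docs = ["Big Data Big Questions", "big data with Hadoop and Spark"]
    print(word_count(docs))
    # {'and': 1, 'big': 3, 'data': 2, 'hadoop': 1, 'questions': 1, 'spark': 1, 'with': 1}
```

On a real cluster, Hadoop runs many mappers and reducers in parallel across nodes and performs the shuffle over the network. In PySpark, the same pipeline is commonly written with `flatMap`, `map`, and `reduceByKey` on an RDD.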

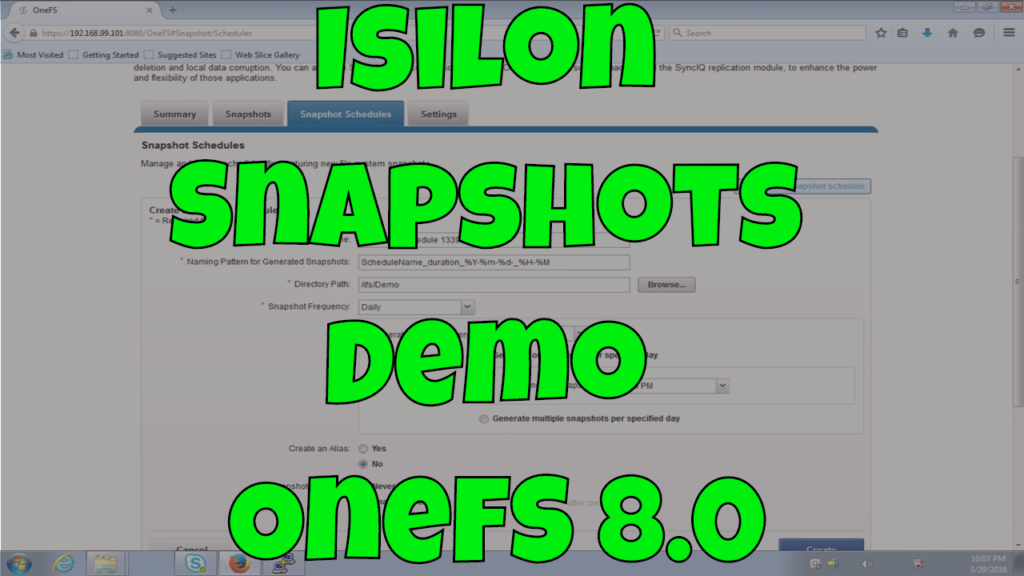

So, we’ve gone through the math portion, and we’ve gone through learning the baseline of some of the Big Data stuff. Now, let’s get into machine learning. This is where you get to use your skills and your background with JavaScript: I would start looking into TensorFlow.js, which is machine learning in the browser. If you’re not familiar with TensorFlow, I’ve talked here a little bit about why it’s awesome. It was released by Google, and it’s really, really popular right now in the Big Data community, especially around machine learning and deep learning. There are some really cool features in it, and there’s a lot you can do with it from the browser.

So, TensorFlow.js is where your background gets to shine. Go through the tutorials, but don’t skip the other steps. Start playing with the Pac-Man interface they have, try the pitch-type example (is it a fastball or a curveball?), and now you’re gonna understand some of that math because you did step one first, right? You didn’t cheat. I know everybody is opening their browser right now: “I’m going to TensorFlow.js right now.” It’s a really cool resource, but you’re gonna understand the math behind it, and this is gonna get you started.
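When you train a model in TensorFlow.js, you typically pick an optimizer (for example `tf.train.sgd`) and call the model’s `fit` method, and the library handles the gradient math for you. The sketch below is not TensorFlow.js code; it’s a dependency-free Python illustration, assuming a one-feature linear model with mean squared error loss, of the loop that kind of training runs: predict, measure the error, compute gradients, and step the parameters downhill. The function names are hypothetical.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = mean(error^2) with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step both parameters against the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error of the line w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


if __name__ == "__main__":
    xs = [1, 2, 3, 4]
    ys = [3, 5, 7, 9]  # toy data on the line y = 2x + 1
    w, b = train_linear_model(xs, ys)
    print(f"w={w:.3f}, b={b:.3f}, loss={mse(xs, ys, w, b):.6f}")
```

On this toy data, the loop converges to w ≈ 2 and b ≈ 1. If you raise the learning rate too far (for this data, above roughly 0.12), the updates overshoot and diverge; the learning rate is the same kind of knob you pass to an optimizer such as `tf.train.sgd`.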

And now you’ve got the math background, and you’ve also got the ability to say, “Hey, what if we wanted to send [Phonetic] this up and put it in some kind of distributed system?” Whether that’s Hadoop or just the basics of Spark, you have that background, too. You’ve probably done all this in six weeks, and it gives you an opportunity to start looking around and understanding more. Maybe there are some projects internally in your organization you can look for, or maybe it’s something you’re planning for further down the road. Maybe you’re in college right now looking to do it. By doing some of these steps right now, you’re really setting yourself up for success.

Well, thanks again. I hope everyone got a lot of information from this. I hope you’re going out there and learning linear algebra, and playing that Pac-Man game on TensorFlow.js too. If you have any questions, make sure you submit them. Until next time. Thanks.