My Tensorflow Journey

It all started last year when I accepted the challenge to take Andrew Ng’s Coursera Machine Learning course with the Big Data Beard Team. Now, a year later, I have a new Pluralsight course diving into Tensorflow (Implementing Neural Networks with TFLearn), and I’m writing a blog post about how to get started with Tensorflow. For years I have worked on the Data Engineering side of Big Data projects, but I thought it was time to take a journey to see what happens on the Data Science side. I’ll admit I didn’t start my Tensorflow journey just for the education: I see an opportunity for those in the Hadoop ecosystem to start using deep learning frameworks like Tensorflow in the near future. With all that being said, let’s jump in and learn how to get started with Tensorflow using Python!

What is Tensorflow


How To Get Started with Tensorflow

Now that we understand deep learning and Tensorflow, we need to get the Tensorflow framework installed. In production environments GPUs are preferred, but CPUs will work for our lab. There are a couple of different options for getting Tensorflow installed. My biggest suggestion for Windows users is to use a Docker image or an AWS Deep Learning AMI. However, if you are a Linux or Mac user, it’s much easier to run a pip install. Below are the commands I used to install and run Tensorflow on my Mac.

$ bash commands to install Tensorflow

(using a virtual environment)

Always check out the official Tensorflow documentation.
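Once the install finishes, a quick way to confirm that Python can actually see Tensorflow, and which version you got, is a short check script. This is a minimal sketch using only the standard library; the function name installed_version is hypothetical. Because the examples in this post use the Tensorflow 1.x API (tf.Session, tf.placeholder), it also flags a release that is not 1.x.

```python
import importlib
import importlib.util


def installed_version(package="tensorflow"):
    """Return the package's version string, or None if it is not installed."""
    # find_spec locates the package without importing it, so a missing
    # install is reported cleanly instead of raising ImportError
    if importlib.util.find_spec(package) is None:
        return None
    module = importlib.import_module(package)
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("Tensorflow is not installed in this environment")
    else:
        print("Tensorflow", version, "is installed")
        if not version.startswith("1."):
            # tf.Session and tf.placeholder live under tf.compat.v1 in 2.x
            print("Note: the 1.x examples in this post need tf.compat.v1 here")
```

Run it from the same environment you installed into; if it reports that Tensorflow is missing, the pip install most likely went into a different Python.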

Tensorflow Hello World

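from __future__ import print_function
import tensorflow as tf

# Tensorflow 1.x API: tf.constant only adds a node to the graph
a = tf.constant('Hello Big Data Big Questions!')

# Nothing is evaluated until you run it in a session, trust me 🙂
sess = tf.Session()

# Print results (sess.run returns bytes in Python 3, so decode them)
print(sess.run(a).decode())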
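The session is needed because Tensorflow 1.x separates building a computation graph from running it: tf.constant only records a node, and sess.run is what evaluates it. As a rough illustration only, the toy below mimics that two-phase idea in plain Python. The Constant, Add, and ToySession classes are hypothetical and are not part of Tensorflow’s API.

```python
class Node:
    """A node in a toy computation graph; nothing is computed on creation."""

    def evaluate(self):
        raise NotImplementedError


class Constant(Node):
    def __init__(self, value):
        self.value = value

    def evaluate(self):
        return self.value


class Add(Node):
    def __init__(self, left, right):
        self.left = left
        self.right = right

    def evaluate(self):
        # The inputs are only evaluated when this node is run
        return self.left.evaluate() + self.right.evaluate()


class ToySession:
    """Evaluates graph nodes on request, loosely like sess.run."""

    def run(self, node):
        return node.evaluate()


# Phase 1: build the graph (no work happens yet)
greeting = Add(Constant("Hello "), Constant("Big Data Big Questions!"))

# Phase 2: run the graph
sess = ToySession()
print(sess.run(greeting))  # prints "Hello Big Data Big Questions!"
```

Real Tensorflow graphs are far richer (devices, variables, gradients), but the split between "describe the computation" and "run it" is the part that makes the session necessary in 1.x.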

Beyond Tensorflow Hello World with MNIST

After building out a Tensorflow Hello World, let’s build a model. Our Tensorflow journey will begin by using a neural network to recognize handwritten digits. In the deep learning and machine learning world, the famous Hello World is to use the MNIST data set to train models that identify handwritten digits from 0 to 9. There are thousands of examples on GitHub, in textbooks, and in the official Tensorflow documentation. Let’s grab an example from one of my favorite Tensorflow repos on GitHub, by Aymeric Damien.
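Each MNIST image is 28 x 28 grayscale pixels, which the example flattens into a vector of 784 inputs, and each label is one of the 10 digits. Because the script loads the data with one_hot=True, a label such as 3 becomes a 10-element vector with a single 1 in position 3. Here is a minimal plain-Python sketch of both transformations, using a made-up blank image rather than real MNIST data; the helper names flatten and one_hot are hypothetical.

```python
IMAGE_SIDE = 28    # MNIST images are 28 x 28 pixels
NUM_CLASSES = 10   # digits 0 through 9


def flatten(image):
    """Turn a 28 x 28 grid (a list of rows) into a flat list of 784 pixels."""
    return [pixel for row in image for pixel in row]


def one_hot(label, num_classes=NUM_CLASSES):
    """Encode a digit label as a vector with a 1.0 at the label's index."""
    if not 0 <= label < num_classes:
        raise ValueError("label must be between 0 and %d" % (num_classes - 1))
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


# A blank placeholder image, not real MNIST data
blank_image = [[0.0] * IMAGE_SIDE for _ in range(IMAGE_SIDE)]

print(len(flatten(blank_image)))  # 784, matching num_input in the script
print(one_hot(3))  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```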

Now, as Data Engineers, we need to focus on being able to run and execute this Hello World MNIST code. In a later post we can cover what is happening behind the code. I’ll also show you how to use a Tensorflow abstraction layer to reduce complexity.

First, let’s save this code as mnist-example.py:

"""Neural Network.

A 2-Hidden Layers Fully Connected Neural Network (a.k.a Multilayer Perceptron)
implementation with TensorFlow. This example is using the MNIST database
of handwritten digits (http://yann.lecun.com/exdb/mnist/).

Links:
    [MNIST Dataset](http://yann.lecun.com/exdb/mnist/).

Author: Aymeric Damien
Project: https://github.com/aymericdamien/TensorFlow-Examples/
"""

from __future__ import print_function

# Import MNIST data
# (the tensorflow.examples.tutorials module is not available in Tensorflow 2.x)
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("/tmp/data/", one_hot=True)

import tensorflow as tf

# Parameters
learning_rate = 0.1
num_steps = 500
batch_size = 128
display_step = 100

# Network Parameters
n_hidden_1 = 256  # 1st layer number of neurons
n_hidden_2 = 256  # 2nd layer number of neurons
num_input = 784  # MNIST data input (img shape: 28*28)
num_classes = 10  # MNIST total classes (0-9 digits)

# tf Graph input
X = tf.placeholder("float", [None, num_input])
Y = tf.placeholder("float", [None, num_classes])

# Store layers weight & bias
weights = {
    'h1': tf.Variable(tf.random_normal([num_input, n_hidden_1])),
    'h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
    'out': tf.Variable(tf.random_normal([n_hidden_2, num_classes]))
}
biases = {
    'b1': tf.Variable(tf.random_normal([n_hidden_1])),
    'b2': tf.Variable(tf.random_normal([n_hidden_2])),
    'out': tf.Variable(tf.random_normal([num_classes]))
}

# Create model
def neural_net(x):
    # Hidden fully connected layer with 256 neurons
    # (no activation function is applied, so this layer is linear)
    layer_1 = tf.add(tf.matmul(x, weights['h1']), biases['b1'])
    # Hidden fully connected layer with 256 neurons (also linear)
    layer_2 = tf.add(tf.matmul(layer_1, weights['h2']), biases['b2'])
    # Output fully connected layer with a neuron for each class
    out_layer = tf.matmul(layer_2, weights['out']) + biases['out']
    return out_layer

# Construct model
logits = neural_net(X)
prediction = tf.nn.softmax(logits)

# Define loss and optimizer
loss_op = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(
    logits=logits, labels=Y))
optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
train_op = optimizer.minimize(loss_op)

# Evaluate model
correct_pred = tf.equal(tf.argmax(prediction, 1), tf.argmax(Y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

# Initialize the variables (i.e. assign their default value)
init = tf.global_variables_initializer()

# Start training
with tf.Session() as sess:

    # Run the initializer
    sess.run(init)

    for step in range(1, num_steps + 1):
        batch_x, batch_y = mnist.train.next_batch(batch_size)
        # Run optimization op (backprop)
        sess.run(train_op, feed_dict={X: batch_x, Y: batch_y})
        if step % display_step == 0 or step == 1:
            # Calculate batch loss and accuracy
            loss, acc = sess.run([loss_op, accuracy], feed_dict={X: batch_x,
                                                                 Y: batch_y})
            print("Step " + str(step) + ", Minibatch Loss= " +
                  "{:.4f}".format(loss) + ", Training Accuracy= " +
                  "{:.3f}".format(acc))

    print("Optimization Finished!")

    # Calculate accuracy for MNIST test images
    print("Testing Accuracy:",
          sess.run(accuracy, feed_dict={X: mnist.test.images,
                                        Y: mnist.test.labels}))

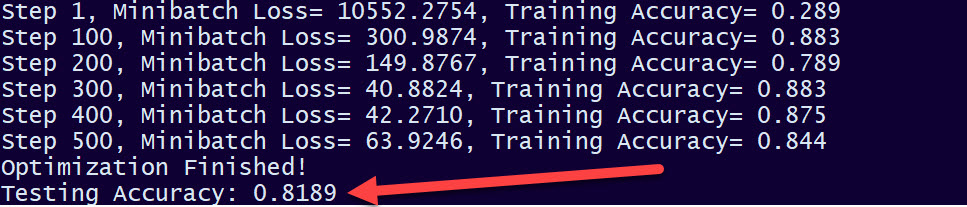
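In the script, each layer computes tf.add(tf.matmul(x, W), b), and loss_op averages tf.nn.softmax_cross_entropy_with_logits over the batch. To make that arithmetic concrete, here is a plain-Python sketch for a single example with tiny made-up weights (2 inputs, 3 classes) instead of the real 784-256-256-10 network. The function names are hypothetical, and Tensorflow’s own implementation is vectorized and runs on whole batches at once.

```python
import math


def dense(x, weights, bias):
    """x @ weights + bias for one example, no activation (like tf.add(tf.matmul(x, W), b))."""
    return [sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
            for j in range(len(bias))]


def softmax(logits):
    """Turn logits into probabilities; subtracting the max avoids overflow in exp."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_cross_entropy(logits, one_hot_label):
    """Cross-entropy between a one-hot label and softmax(logits), for one example."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # log(softmax(z)[k]) == z[k] - log_sum_exp
    return -sum(y * (z - log_sum_exp) for y, z in zip(one_hot_label, logits))


# Made-up weights: 2 input features, 3 output classes
x = [1.0, 2.0]
W = [[0.5, -0.2, 0.1],
     [0.3, 0.4, -0.1]]
b = [0.0, 0.1, 0.2]

logits = dense(x, W, b)   # approximately [1.1, 0.7, 0.1]
label = [1.0, 0.0, 0.0]   # the true class is 0
print(softmax(logits))
print(softmax_cross_entropy(logits, label))
```

With a one-hot label, the loss reduces to minus the log of the probability assigned to the true class, so it shrinks toward 0 as the model grows more confident in the right digit. tf.reduce_mean then averages this per-example value over the batch.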

Next, let’s run our MNIST example:

$ python mnist-example.py
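While the script runs, the training loop takes num_steps = 500 steps, feeds batch_size = 128 examples per step from mnist.train.next_batch, and prints a progress line on step 1 and every display_step = 100 steps. A rough plain-Python sketch of that schedule follows; make_batcher is a hypothetical, simplified stand-in for mnist.train.next_batch that walks through a list in order, and the data is placeholder integers rather than images.

```python
num_steps = 500
batch_size = 128
display_step = 100


def make_batcher(examples, batch_size):
    """Return a hypothetical next_batch() that walks through examples in order, wrapping around."""
    position = 0

    def next_batch():
        nonlocal position
        batch = [examples[(position + i) % len(examples)] for i in range(batch_size)]
        position = (position + batch_size) % len(examples)
        return batch

    return next_batch


# Placeholder data: 1,000 integers standing in for training examples
next_batch = make_batcher(list(range(1000)), batch_size)

logged_steps = []
for step in range(1, num_steps + 1):
    batch = next_batch()
    # ...one optimizer update on `batch` would happen here...
    if step % display_step == 0 or step == 1:
        logged_steps.append(step)

print(logged_steps)            # [1, 100, 200, 300, 400, 500]
print(num_steps * batch_size)  # 64000 examples fed in total
```

So you should expect six "Step ..." lines before "Optimization Finished!". The 64,000 examples amount to only a little more than one pass over the 55,000 training images that read_data_sets sets aside by default, which is one reason the short run leaves room for improvement.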

…results will begin to appear here…

Finally, we have our results. We get 81% accuracy using the sample MNIST code. We could do better and get closer to 99% with some tuning or by adding different layers, but for our first data model in Tensorflow this is great. In fact, in my Implementing Neural Networks with TFLearn course we walk through how to use fewer lines of code and get better accuracy.
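The 81% figure is what the accuracy op in the script reports: tf.argmax picks the highest-scoring class for each image, tf.equal compares it with the true label, and tf.reduce_mean over the cast booleans gives the fraction correct. A plain-Python sketch of the same calculation, on a few made-up predictions rather than real model output (the helper names are hypothetical):

```python
def argmax(values):
    """Index of the largest value (in this sketch, the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(predictions, one_hot_labels):
    """Fraction of examples whose predicted class matches the true class."""
    correct = [argmax(p) == argmax(y) for p, y in zip(predictions, one_hot_labels)]
    # Casting True/False to 1.0/0.0 and averaging mirrors tf.reduce_mean(tf.cast(...))
    return sum(1.0 if c else 0.0 for c in correct) / len(correct)


# Made-up class probabilities for 4 images over 3 classes
predictions = [[0.7, 0.2, 0.1],
               [0.1, 0.8, 0.1],
               [0.3, 0.3, 0.4],
               [0.5, 0.4, 0.1]]
labels = [[1, 0, 0],
          [0, 1, 0],
          [0, 1, 0],
          [1, 0, 0]]

print(accuracy(predictions, labels))  # 0.75
```

Here three of the four made-up predictions pick the right class, giving 0.75; the script does the same over the full MNIST test set.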