What is Lambda Architecture?

Since Spark, Storm, and other stream processing engines entered the Hadoop ecosystem, the Lambda Architecture has been the de facto architecture for Big Data with a real-time processing requirement. In this episode of Big Data Big Questions I’ll explain what the Lambda Architecture is and how developers and administrators can implement it in their Big Data workflow.

Transcript

(Forgive any errors; the text was transcribed by a machine.)

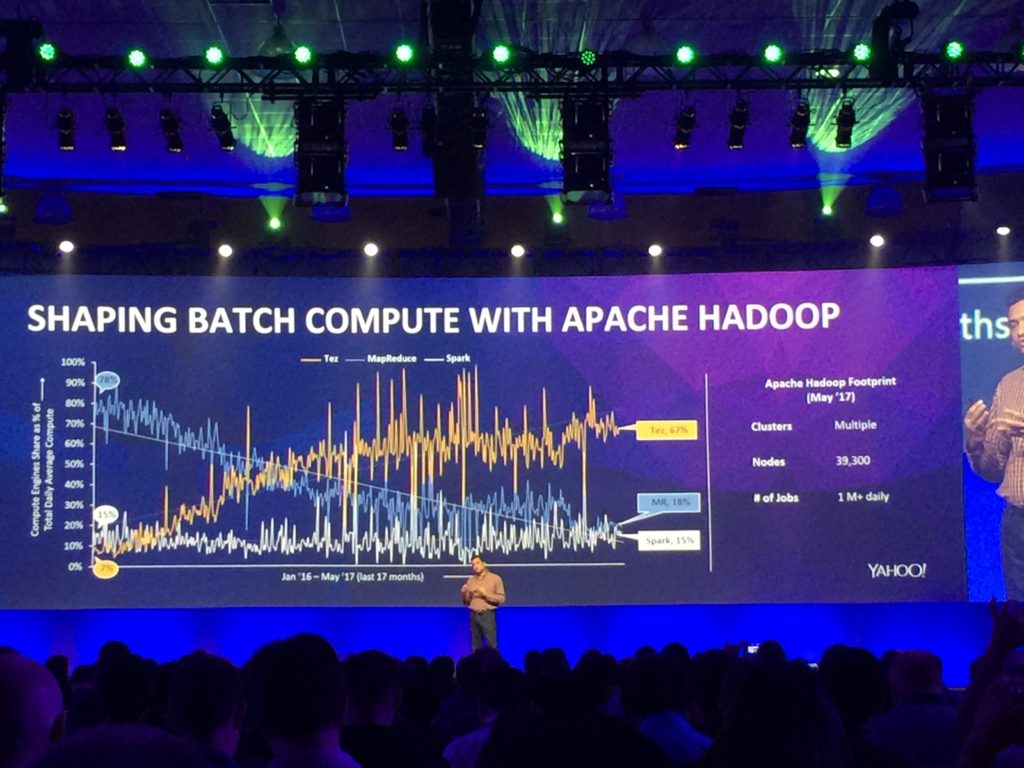

Hi folks, Thomas Henson here with thomashenson.com, and today is another episode of Big Data Big Questions. Today’s question is: what is the Lambda Architecture, and how does it relate to our big data and Hadoop ecosystem? Find out right after this.

When we talk about the Lambda Architecture and how it’s implemented in Hadoop, we have to go back and look at Hadoop 1.0 and 2.0, when we really didn’t have a speed layer or Spark for streaming analytics. Back in the traditional days of Hadoop 1.0 and 2.0 we were using MapReduce for most of our processing. The way that would work is our data would come in and we would pull it into HDFS. Once our data was in HDFS, we would run some kind of MapReduce job, so we might use Pig or Hive, write our own custom job, or use some of the other frameworks in the ecosystem. That was all mostly transactional, right? All our data had to be in HDFS, so we had to have a complete view of our data to be able to process it.

Later on we started looking at it and seeing that, hey, we need to be able to pull data in and process it when the data is not really complete. So it’s less transactional: maybe we have incomplete parts of the data, or the data is continuing to be updated. That’s where Spark, Flink, and some of the other streaming analytics and stream processing engines came in. We wanted to be able to process that data as it came in, and do it a lot faster too. So we took out the need to even put it into HDFS when we’re first starting to process it, because that takes time to write. We wanted to be able to move our data and process it before it even hit HDFS and our disk in that whole system.

But we still needed to be able to do batch processing, right? Some analytics, some data that we’re going to pull, we want in real time. But then there are other insights, like monthly reports or quarterly reports, that are just better suited to transactional processing. The same goes for when we hold on to historical data and use the platform like a traditional enterprise data warehouse, but on a larger, more Hadoop-based platform, with Hive, Presto, and some of the other SQL engines that work on top of Hadoop. So the need came up because we had these two different systems for how we were going to process data, and we started adopting the Lambda Architecture.

What the Lambda Architecture was is this: as your data comes in, it sits in a queue, so maybe you have it sitting in Kafka or just some kind of message queue. Any data that needed to be pulled out and processed as a stream, we would take and process in what we call our speed layer. In the speed layer we might use Spark or Flink to pull out some insights and push those right out to our dashboards. For the data that was going to exist for batch, for the transactional processing, or that we just hold for historical purposes, we would have our MapReduce layer, which we call the batch layer.

So think about two different prongs. You have your speed layer coming in here, pulling out your insights. But your data, as it sits in the queue, also goes into HDFS, and it’s still there to run Hive on top of, to hold on to as historical data, or maybe to run some MapReduce jobs against and push up to a dashboard. What you have is a two-pronged approach, with your speed layer on top and your batch layer on the bottom. As that data comes in, you still have your data in HDFS, but you’re also able to pull results from your real-time processing as the data arrives.

That’s what we mean when we say Lambda Architecture: it’s just a two-layer system, with a batch layer to do our MapReduce and batch jobs and a speed layer to do our streaming analytics, whether that’s through Spark, Flink, Apache Beam, or some of the other pieces. It’s a really good pattern to know, and it’s something that’s been in the industry for quite a long time. If you’re new to the Hadoop environment, you definitely want to know it and be able to reference back to it.

There are some other architectures that we’ll talk about in future episodes, so make sure you subscribe so that you never miss an episode. Go right now and subscribe so that the next time we talk about an architecture, you don’t miss it. I’ll check back with you next time. Thanks, folks.
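To make the two-pronged flow described in the transcript concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: a list stands in for the message queue (such as Kafka), another list stands in for HDFS, and the class and function names (`SpeedLayer`, `BatchLayer`, `consume`) are hypothetical rather than part of any real framework. It only shows how each event fans out to both layers.

```python
from collections import Counter

# Hypothetical stand-ins: plain Python lists play the message queue and
# the HDFS store. Nothing here talks to a real cluster.


class SpeedLayer:
    """Updates a running view one event at a time, as events arrive."""

    def __init__(self):
        self.realtime_view = Counter()

    def process(self, event):
        # Incremental update: no need to wait for the full dataset.
        self.realtime_view[event["page"]] += 1


class BatchLayer:
    """Stores every event, then recomputes a view over the full history."""

    def __init__(self):
        self.master_dataset = []  # stand-in for files landed in HDFS

    def store(self, event):
        self.master_dataset.append(event)

    def run_batch_job(self):
        # Stand-in for a MapReduce or Hive job over the complete dataset.
        return Counter(e["page"] for e in self.master_dataset)


def consume(queue, speed, batch):
    """Fan each queued event out to both prongs."""
    for event in queue:
        speed.process(event)  # speed layer: insight as the data arrives
        batch.store(event)    # batch layer: keep it for later jobs


# Hypothetical page-view events sitting in the queue.
queue = [{"page": "home"}, {"page": "pricing"}, {"page": "home"}]

speed, batch = SpeedLayer(), BatchLayer()
consume(queue, speed, batch)
batch_view = batch.run_batch_job()

print("speed layer view:", dict(speed.realtime_view))
print("batch layer view:", dict(batch_view))
```

In this toy run both views agree because the batch job runs right after ingestion. In a real deployment the batch view would typically lag behind and be recomputed on a schedule, while the speed layer covers the most recent data.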