Explaining the Data Lake

The Enterprise space is notorious for throwing around jargon. Take the term Data Lake, for example. Does it mean there is a real lake in my data center? Because that sounds like a horrible idea. Or is a Data Lake just my Hadoop cluster?

Data Lakes, or Data Hubs, have become mainstream in the past 2 years because of the explosion in unstructured data. However, one person’s Data Lake is another’s data silo. In this video, I’ll put a definition and strategy around the term Data Lake. Watch it to learn how to build your own Data Lake.

Transcript

Thomas Henson: Hi, I’m Thomas Henson, with thomashenson.com. And today is another episode of “Big Data, Big Questions.” So, today’s question we’re going to tackle is, “What is a data lake?” Find out more, right after this.

So, what exactly is a data lake? If you’ve ever been to a conference, or ever heard anybody talk about big data, you’ve probably heard them use the term “data lake.” And if you haven’t heard them say data lake, they might have said data hub. So, what do we really mean when we talk about a data lake? Is that just what we call our Hadoop cluster? Well, yes and no. Really, when we talk about a data lake, we want an area that holds all of the data we want to analyze. That can be the raw data coming in from, maybe, some sensors, or it can be our transactional sales data and our quarterly and yearly historical reporting, all in one area so that we can bring that data together and analyze it.

And so, if we look at where the term data lake comes from, it actually comes from a blog post that was published some years ago. I apologize for not remembering the author’s name to give credit, but what they said is that when we talk about unstructured data, the data we’re going to analyze in Hadoop, it’s really more like a lake in the real world. We call it a lake because it’s uneven. You don’t know how much data is going to be in it, and it’s not a perfect circle. If you’ve ever gone to a lake, you know it doesn’t have a specific shape. And think about the way water comes into it: you might have some underground streams, some above-ground streams, and some runoff from the rain, all flowing into it. Compare that to what we’ve traditionally seen with the structured data in our enterprise data warehouse. That’s more like bottled water, right? It’s perfect: we know the exact amount that goes into each bottle, it’s all packaged up, it’s been filtered, and we know its source. That’s really structured. But in the real world, data exists unstructured. To get to the point where we have that bottled water, where our structured data is packaged tightly so that we can send it out, we need to take the data from unstructured to structured.

This is where the concept of a data lake comes in: we want all of this unstructured data available, in the form it already exists in, to all of our analysts. So maybe I want to run a science experiment and test out some different models with some historical data and some new data that’s coming in. My counterpart, maybe in another division or just on another project, can use the same data. We all have access to that data for our experiments, so that when the time comes for it to support, maybe, our enterprise dashboards or applications, we can push that data back out in a more structured form. Until we get to that point, though, we really want that data in one central location so that we all have access to it. If you’ve worked in some organizations, you’ll find that different groups each have their own data sets, and those data sets may actually be the same. I’ve sat on enterprise data boards at different corporations and on different projects that existed just because we all had the same data but didn’t call it the same thing. That really keeps us from being able to share the data.

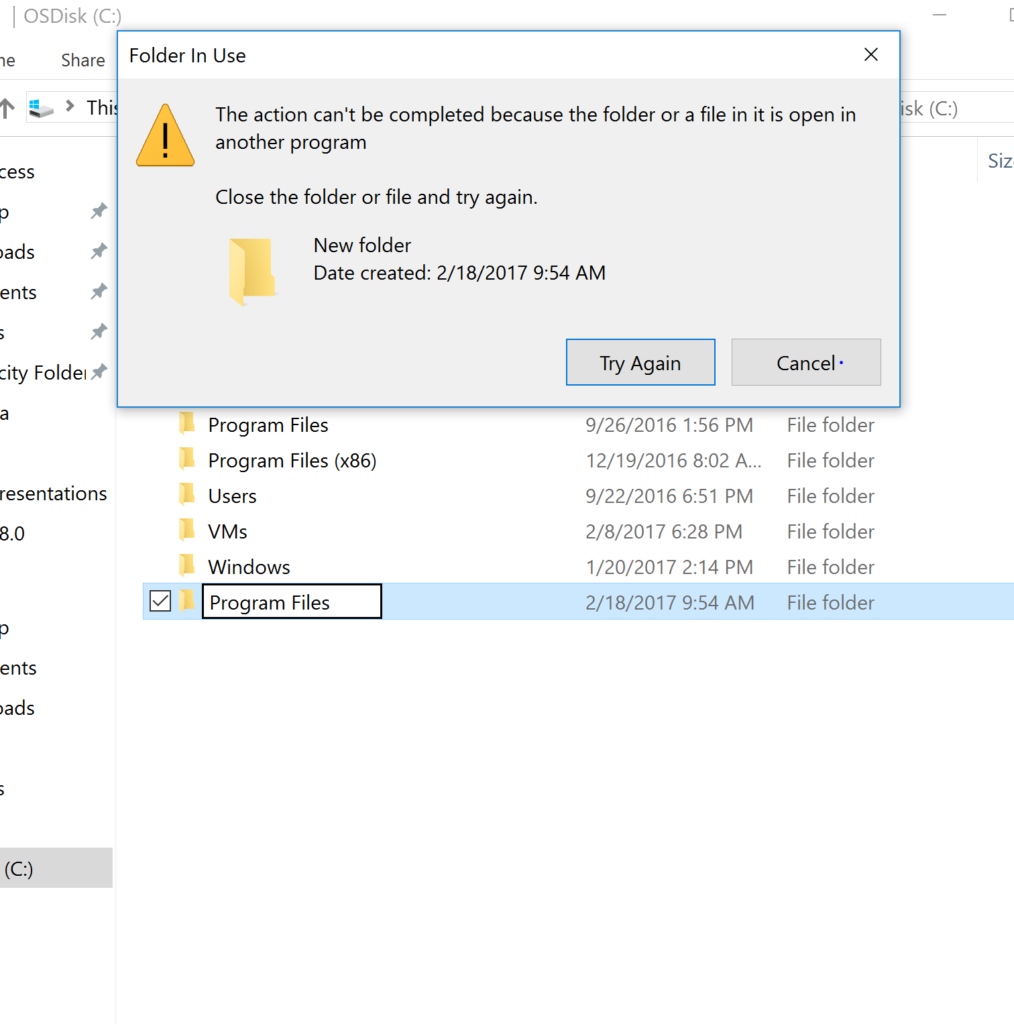

So, from a data lake perspective, don’t just think of your data lake as your Hadoop cluster, right? You want it to be multi-protocol, with different ways for data to come in and be accessed, and you don’t want it to become just another data silo. That’s what we mean when we talk about a data lake or data hub. It’s our analytics platform, but really it’s where our company’s data lives, and it gives us the ability to share that data as well.

So, that’s our Big Data, Big Question for today. Make sure you subscribe so that you never miss an episode. And if you have any questions you want me to answer, go ahead and submit them: go to thomashenson.com and find Big Data, Big Questions, find me on Twitter, or leave them here on YouTube. However you want to ask those questions, I’ll do my best to get back to you and answer them. Thanks again.