I honestly think developing real-time analytics is one of the hardest feats for developers to take on!

I’ll admit I’m for sure biased, but that doesn’t make me wrong.

My first project in the Hadoop eco-system was a real-time application when the Hadoop community still didn’t have real-time processing. I’ve always been honest in my posts and always will. So let me not sugarcoat this…the project sucked and was deemed a failure!

My team didn’t understand the requirements for real-time and couldn’t meet the requirements. The project was over budget and delayed. However, all was not lost, years have past and I learned a lot and the Hadoop community now has a new frameworks to speed up processing in real-time. Before developing your real-time analytics project please read these top 3 recommendations for real-time analytics! You will thank me….

What is Real-Time Analytics

Real-time analytics is the ability to analyze data as soon as it is created. Not only as soon as it’s created, but before all the data is uncovered. Traditional batch architectures have all the data in place before processing, but real-time processing is done as the data is created.

To be picky there is really no such thing as real-time analytics! What we have right now is near real-analytics which to humans is at millisecond speed or just faster than our competitors. For true real-time we would have to analyze the data at the same time it occurs and right now there is always some latency from networking, processing, etc. Let’s table this discussion until quantum computing becomes mainstream……

Take for example a GPS enabled application for brick and mortar stores. Using the phone as a sensor the application will know the customers proximity to the store. When the customer is close the store a offer is sent via the phone. Sounds simple, but imagine millions of sensors entering data into the system and trying to analyze the data location information. Add to this example for knowing store locations, store hours, local events, inventory levels, etc. Now many things could go wrong here. For example, the application could send offer for a product not in stock, send offer too late once the customer is out of range, send offer to a store that is closed.

Still think building those real-time applications is easy? Let’s look into the future…

Tsunami of Real-Time Data

How much data are we talking about for the future of real-time analytics? Gartner predicts that by 2020 worldwide we will have 20.4 billion devices connect. The predcition roughly estimates the world population of 7 billion people with an averages 3 devices per person. Sounds like a lot, however, I think it’s a conservative prediction. How many devices do you have connect in your home? I have 25 in my home and I’m not considered on the bleeding edge. I’ve talked with quite a few people who have as many as 75 plus. So let’s say 1/4 of the population has 15 devices by 2020 that will total closer to 28 plus billion devices.

Recommendations for Real-Time Analytics

Since we know why streaming processing and real-time analytics are growing at a perplexing pace, lets’ discuss recommendations for building those real-time applications.

1 – Timing is Everything

Know the time to value for the insights of the data. All data has different values assigned to it and that value degrades over time. Picture our previous example for a retailer using location services to send offers via a mobile application. How valuable is potential customer’s location? It’s really valuable, but only if the application can process the data quickly enough to send an incentive while the customer is near their physical location. Otherwise, the application is providing historical information.

After understanding the time value of the data you can find the correct framework (Flink, Spark, Storm, etc) to process the data. Most of the streaming data we need to be processed real-time for specific insights. Example pulling that user location data and time. Remember not all data is processed the same way batch vs. streaming.

2 – Make Sure Applications will Scale

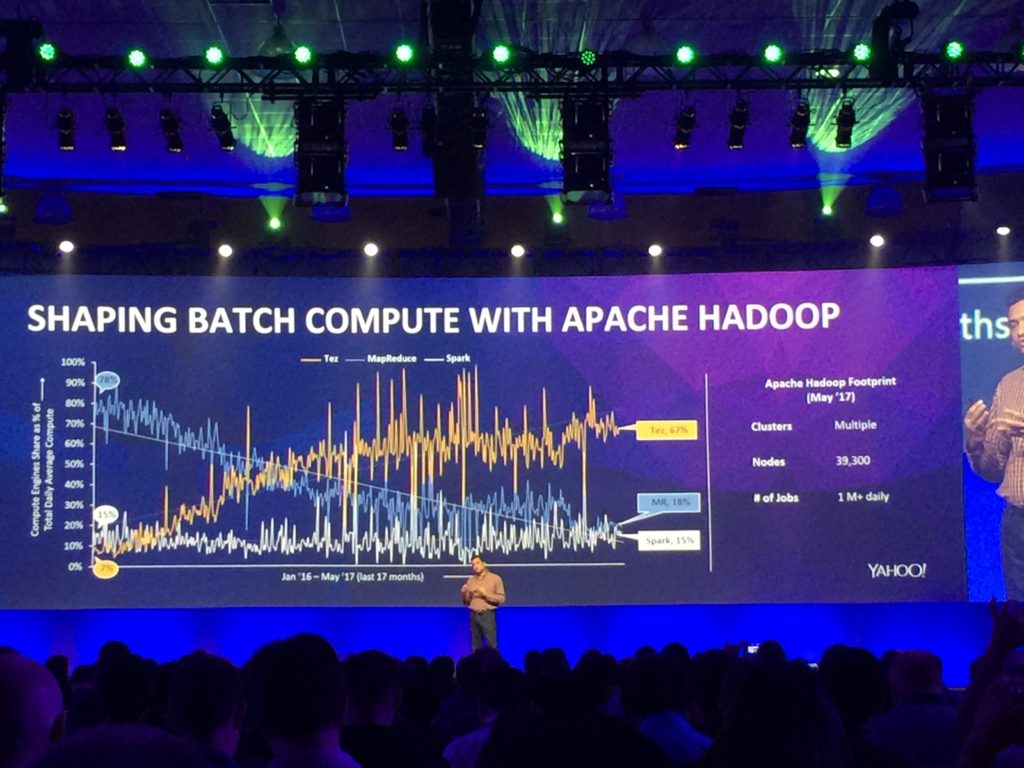

Make sure your real-time application can scale. Not just scale with large influxes of data, but independently with processing and storage. In the future of IoT and Streaming data sources will be extremely unpredictable. One day you might ingest 2 TB of new data and the next 2 PB. If you think I’m joking checkout my talk from the DataWorks Summit of the Future Architecture of Streaming Analytics. Build application on the foundation of architectures, services, and components that can scale. Remember our friend Murphy and his law about how things can go wrong.

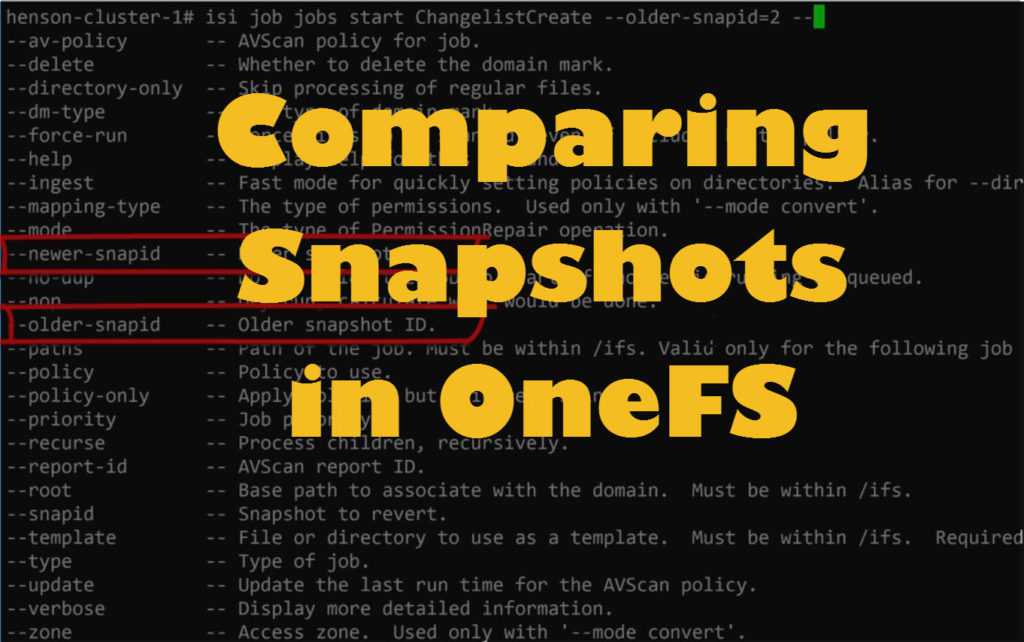

Scaling isn’t all focused on just being able to ingest more data, but scaling independently with compute and capacity. Make sure your real-time application supports a data lake strategy. Isilon’s Data Lake Platform give the ability to separate compute and capacity when growing your Hadoop clusters. So when a new set of data comes in that is 10 TB of data that isn’t really growing and probably will only run weekly or monthly you can scale your capacity without having to add unneeded capacity. Also, a data lake strategy gives you the ability to opt out of the 3x replication with 200% utilization vs. 80% utilization on Isilon. Whether you use Isilon or not make sure you have a data lake strategy that builds on the architecture of independent scaling!!

3 – Life Cycle Cost of Data

Since we know the value of the data decrease over time we need to assign a cost for that data. I know you probably just rolled your eyes when I mentioned the cost of data, but it’s important to understand that data is a product. Just like Amazon sells books for different prices, they also assign cost data’s value varies over time

As big data developers we want to hold on to data forever and bring in as many news sources as possible. However, when our manager or CFO gets the bill for all the capacity you need you will be sitting endless meetings and writing up justification reports for about why you are holding all this data. This means less time doing what we love, coding in our Hadoop Cluster. Know the value of your data and plan accordingly!!

Wrap Up of Real-time Analytics

Finishing up our discussion, remember that real-time analytics is processing of data as soon as the data is generated. By analyzing the data as it’s generated decision can be made quicker which helps create better applications for our users. When building real-time applications make sure you follow my 3 recommendations by understanding the time value of the data, building on systems that scale independently, and assigning value to the data. Successfully building real-time applications depends on these 3 core points.